Increase The Scale Of Your Web Scraper Using Proxies

by Arnab Dey Technology Published on: 09 March 2023 Last Updated on: 08 April 2024

Web scraping is a legitimate method of gathering current market information. Sometimes loosely called data mining, it’s a process in which a program visits anywhere from dozens to thousands of websites and collects public data from them.

That doesn’t mean it comes without challenges. Proxies, or a Google Search API from a provider like Smartproxy, can get around most of them, but first you need to understand the obstacles. Keep reading to discover how to scale your scraping efforts using proxies.

Challenges of Web Scraping

The most common obstacle is IP blocking. A website might not be blocking data mining specifically, but rather any large volume of requests originating from one IP address. These blocks exist to prevent automated fuzzing and other hacking attempts.

As such, many websites have rate limits in place. A typical human visitor makes around 300 to 600 requests to a website in an hour, so it’s usually safe to scrape if you stay under about 500 requests per hour from one IP address. If you want a faster scraper, you can rotate through a pool of proxies and distribute requests across multiple IP addresses, so that no single address exceeds the limit. This lets you scrape at a higher overall speed while reducing the likelihood of blocks or flags from the target website.
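The rotation idea can be sketched in a few lines of Python. This is a minimal illustration, not a production scheduler: the class name `ProxyRotator`, its parameters, and the proxy URLs in the usage note are all hypothetical, and the 500-requests-per-hour budget is simply the figure from the paragraph above.

```python
import itertools
import time


class ProxyRotator:
    """Cycle through proxies while capping requests per proxy per time window."""

    def __init__(self, proxies, max_per_window=500, window_seconds=3600,
                 clock=time.monotonic):
        proxies = list(proxies)
        self.max_per_window = max_per_window
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the class can be tested without waiting
        self._cycle = itertools.cycle(proxies)
        self._count = len(proxies)
        # Timestamps of recent requests, keyed by proxy URL.
        self._history = {proxy: [] for proxy in proxies}

    def next_proxy(self):
        """Return the next proxy with budget left, or None if all are exhausted."""
        now = self.clock()
        for _ in range(self._count):
            proxy = next(self._cycle)
            # Keep only the timestamps that still fall inside the window.
            recent = [t for t in self._history[proxy]
                      if now - t < self.window_seconds]
            if len(recent) < self.max_per_window:
                recent.append(now)
                self._history[proxy] = recent
                return proxy
            self._history[proxy] = recent
        return None
```

A scraper would call `rotator.next_proxy()` before each request, for example with `ProxyRotator(["http://proxy1.example.com:8080", "http://proxy2.example.com:8080"])`, and pause when it returns `None`, since that means every address has used up its hourly budget.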

Another challenge is geo-restrictions. Many websites are only visible to visitors in a specific location. As such, if you want to expand into a new market, you’ll have trouble accessing competitors’ sites and seeing local market offers.

Benefits of Proxies

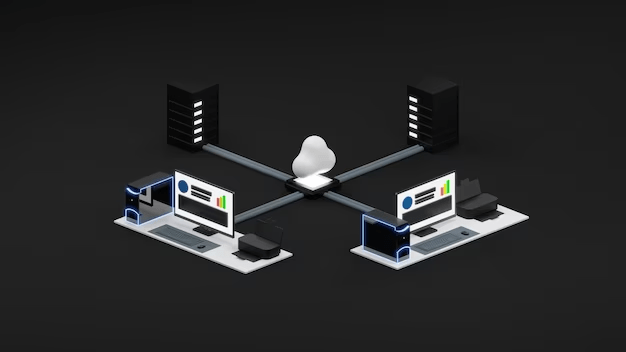

Proxies are an excellent tool that can help you bypass these challenges. A proxy is a server that stands between you and the website you’re accessing. In basic terms, you send a request to the proxy, which sends it to the website. When the website responds, the proxy server relays the response back to you. In that way, you never have to interact with the website directly.
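As a rough illustration of that request path, Python’s standard library can route traffic through a proxy with `urllib.request.ProxyHandler`. The helper name `build_proxy_opener` and the proxy address in the comment are placeholders, and authentication details vary by provider.

```python
import urllib.request


def build_proxy_opener(proxy_url):
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Example usage (placeholder proxy address and target site):
#   opener = build_proxy_opener("http://proxy.example.com:8080")
#   with opener.open("http://example.com/", timeout=10) as response:
#       html = response.read()
```

Every request made through the returned opener goes to the proxy first, which forwards it to the target site and relays the response back.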

The proxy conceals your IP address from the site, so the website sees the proxy’s address rather than yours. The site can still block the proxy’s address, but your own stays hidden, and you can change the IP address the website sees to stay under the rate limits. Before the site’s defenses identify an IP address as suspicious, you can switch to a new one.

You can also choose an IP address located in a new market, meaning you can access geo-restricted content just like the locals. Websites geo-block their content for various reasons, but with proxies it has never been easier to see what competitors offer or to enter a new market for your business. Proxies are crucial if you want to scale your web scraping to deliver more accurate information faster.
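How you select a location depends on the provider, so the following is only a sketch under assumptions: it pretends you hold a small mapping from country codes to proxy URLs. The `GEO_PROXIES` table, its addresses, and the `pick_proxy` helper are all hypothetical.

```python
import random

# Hypothetical pool: country code -> proxy URLs located in that country.
GEO_PROXIES = {
    "us": ["http://us1.proxy.example.com:8080", "http://us2.proxy.example.com:8080"],
    "de": ["http://de1.proxy.example.com:8080"],
}


def pick_proxy(country, pool=GEO_PROXIES, rng=random):
    """Return a random proxy for the given country code, or raise if none exist."""
    candidates = pool.get(country.lower(), [])
    if not candidates:
        raise LookupError(f"no proxy available for country {country!r}")
    return rng.choice(candidates)
```

Calling `pick_proxy("de")` returns an address in Germany, which you would then pass to your HTTP client so the target site serves its German content.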

Best Proxy Choices

Various proxy types are available, but not all are equally suitable for data mining. Below, we consider three types that are the best for data mining.

Residential

Residential proxies use the IP addresses of actual home devices so that your data scraper looks like a real person. With thousands of home IPs available, you will rarely, if ever, be blocked from accessing a website. So opt for a reliable residential proxy.

They’re also more secure, avoiding the risks that free or data center proxies present. Residential proxies are some of the best for data mining, letting you reach almost any location and website.

Rotating

Rotating proxies are a type of residential proxy that assigns a new IP address to every request. That makes them ideal for heavy-duty web scraping, where you’re accessing thousands of websites. Each connection gets a fresh, reliable residential IP, which helps bypass geo-restrictions and website blocks.

Because the IP address changes constantly, you rarely need to worry about rate limits and can scale your data miner to suit your market research needs. Using a rotating proxy also spares you from tedious proxy management when scaling your scraping efforts.

Google Search API

One of the best tools to use is the Google Search API. This tool combines a scraper, parser, and proxy in one. It lets you retrieve Google search results and images with a single API request. It’s the best of both data miners and proxies combined, creating one streamlined program.

You have access to more than 40 million quality proxies, a trustworthy SERP scraper, and a reliable data parser that ensures your collected information is relevant and readable. You can learn about keyword rankings, improve your SEO, and find organic Google search results.

This program is a ready-to-use tool, meaning anyone can use it, no matter their tech and coding knowledge. It integrates with your systems effortlessly, offers premium extracted data, and is unrivaled in the market.

Scale Your Web Scraper

Proxies put a significant pool of IP addresses at your disposal, and unlimited concurrent threads let you scale your web scraper to your needs. Web scraping has become a vital part of a successful business model or marketing strategy, and proxies ensure you can complete the process with minimal delay and enhanced security.
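To show how concurrency and a proxy pool fit together, here is a minimal sketch using Python’s `ThreadPoolExecutor`. The function name `scrape_all` and its `fetch(url, proxy)` callback are assumptions made for illustration: you supply the function that performs the real request. In practice you would cap `max_workers` to whatever your machine and your provider’s plan can sustain.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import cycle


def scrape_all(urls, proxies, fetch, max_workers=8):
    """Fetch every URL concurrently, assigning proxies round-robin.

    fetch(url, proxy) is supplied by the caller and performs the request.
    Returns a list of (url, result) pairs in the same order as urls.
    """
    # Pair each URL with a proxy, wrapping around when the pool is shorter.
    assignments = list(zip(urls, cycle(proxies)))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() runs the calls in worker threads but yields results in input order.
        results = pool.map(lambda pair: fetch(*pair), assignments)
        return [(url, result) for (url, _), result in zip(assignments, results)]
```

Because the proxies are assigned round-robin, three URLs and two proxies would send the first and third URL through the first proxy and the second URL through the second.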
